Unity Android - An optimization retrospective

02 Apr 2017

I’ve been working on Flood for a few weeks now. It’s been fun to finally call something complete and actually be proud of it. The project started as something simple that I could polish like crazy and use as a portfolio piece. Because of this, I consider the game’s performance to be incredibly important, and I’ve made several performance passes on the game since the initial prototype.

This post is mostly going to be a post-mortem. I’ll describe the iterations I’ve gone through and outline what I think went right and wrong with each one.

| Parts | Desktop | Laptop |
|---|---|---|
| CPU | i5-7500 | i7-4710MQ |
| RAM | 16GB | 8GB |
| GPU | R9 380 | GTX 860M |

Development was split between my desktop and my laptop. Both are significantly more powerful than even the most high-end phones, let alone the average phone, so for the most part I did not run into any real performance issues during development. However, performance dropped off a cliff the first time I tested on mobile.

| Grid size | Unity UI | Individual quads | Single static mesh |
|---|---|---|---|
| 24x24 | <5fps | 20-60fps | 60fps |
| 36x36 | - | 5-10fps | 60fps |

This post is mostly about my approaches to the game’s core gameplay element: the grid. The tiles in this grid could animate in size and color, though the player could turn this off. The colors could be changed to accommodate people with color blindness, and to aid this each color also had an accompanying icon with adjustable opacity. The player could also change the grid size for every match.

Initially I had capped the grid size at 24x24, but some of my first testers asked me to increase it. Unfortunately, when I first tried to bump it up to 36x36, the game just ground to a halt. This was my cue to invest some serious time and effort into getting familiar with Unity’s profiling tools.

Unity UI Grid; the naive approach.

My initial prototype was done entirely with Unity’s UI system.

Yeah…

I knew from the start that I would most likely end up redoing most of my initial prototype. And don’t get me wrong: Unity’s UI system is awesome. It’s just in no way intended to deal with the things I asked of it. It’s perfect for the game’s actual UI, but implementing the grid in the UI system is a terrible idea. Let me explain why.

Consider the following situation:

- We’re using a 24x24 grid of tiles. That’s 576 transforms.

- Each tile has a sprite for its color (and possibly a texture), and a sprite for the overlaid icon.

- A custom layout component positions all tiles in a grid of specific dimensions.

- Every child is animated separately using the truly wonderful DOTween.

I felt early on that this approach would become a problem, and that I would not get enough control over layout and rendering to optimize it to a point I deemed acceptable. I did not profile this thoroughly at the time. I just noticed some very significant stuttering (on desktop!) whenever I pressed a new color. When I opened the profiler, it showed spikes of 10+ms that grew to 60+ms every time the grid changed color.

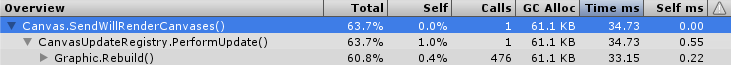

After watching this brilliant Unite 2016 presentation by Ian Dundore about content optimization, I realised just how poorly optimized this approach was.


Everything was done in a single canvas.

I didn’t know exactly how big of a deal this was until I watched that presentation. Every time I changed a transform inside a Canvas, the entire canvas was rebuilt. That meant every UI element; on a 24x24 grid, all 576 tiles. When writing it, I thought that changing a RectTransform’s scale was not included in this. I was wrong. My entire canvas geometry was rebuilt on every single frame in which anything happened at all.

That’s pretty bad for performance.


Render order problems were ‘handled’ by setting the sibling index to last.

UGUI renders elements in the order they are arranged in the hierarchy. Because of this, every scaling tile would be drawn over by any tile after it. To fix this, instead of messing with the render order of each CanvasRenderer component, I hacked it together by reordering all animating tiles to be the last children of their parent. Unfortunately, this shuffled a lot of memory around every time I did it. I didn’t do this every frame, only when you pressed a new color, but it still caused some serious frame time spikes.


UGUI is rendered completely as transparent geometry.

This meant overdraw. Lots of it. Everything is rendered and nothing is culled. Your desktop GPU can handle a good amount of transparent rendering, but mobile GPUs have far less fill rate to spare. Having one sprite for the color+texture and a separate sprite for the icon meant every pixel was drawn with transparency at least 3 times (including the game’s background). On your average phone this is pretty taxing.
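To put "at least 3 times" in perspective, here is the back-of-the-envelope arithmetic for a hypothetical 1080x1920 phone screen at 60fps. The resolution is an assumption for illustration, not one of the test devices.

```python
def pixel_writes_per_second(width, height, layers, fps):
    """Blended pixel writes per second when every pixel is drawn `layers` times."""
    return width * height * layers * fps

# Hypothetical 1080x1920 screen; 3 transparent layers per pixel
# (background, tile sprite, icon sprite), as described above.
full_hd = pixel_writes_per_second(1080, 1920, 3, 60)
print(f"{full_hd:,} blended pixel writes per second")  # 373,248,000 blended pixel writes per second
```

That is roughly 373 million blended writes every second, three times what a single opaque layer would cost.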

Now, the draw call count for the entire game hovered between 10 and 20 most of the time. It’s a good thing Unity is pretty good at batching UGUI elements, so I don’t have to deal with a draw call for each sprite in the grid…

Individual Quads; the batching nightmare.

My first real implementation of the grid used a single quad for each tile, blending in the icon inside of the shader.

Each tile’s color and icon were set through a MaterialPropertyBlock, which was reapplied on every frame in which something changed.

All animations were done using DOTween. The transforms were scaled using their localScale.

This approach was very easy to work with. It allowed me a great deal of flexibility in experimenting and deciding on a final look for the grid’s animation. I could easily change a few lines of code to completely change which tiles animated in what way.


Impact of batching

I would wager that most experienced Unity developers are aware of the difference between a renderer’s material and sharedMaterial properties.

As the documentation puts it, when you change a renderer’s material property and that material is used anywhere else, Unity clones the material and gives that renderer a unique copy.

When you change something on a renderer’s sharedMaterial property, the change applies to the one shared instance of that material, meaning it’s visible on every object using that material.

Assigning a MaterialPropertyBlock acts like changing the material property, only without creating a new material instance.

This makes it easy to use, because you’re not dealing with all these material instances. Unfortunately, as you can read in a recent Unity blog post, renderers with different MaterialPropertyBlocks do not get batched together.

When I wrote this approach I did not have a lot of experience with the performance impact of batching. On a desktop GPU you can easily get away with a few thousand draw calls without too many issues, and I’d never really run into this limitation. The initial 24x24 grid ran poorly with 576 draw calls, but it was still playable. When I turned it up to 36x36, it idled at or below 10fps: going from 576 to 1296 draw calls crippled performance. For mobile I would recommend double-digit or, at most, low triple-digit draw call counts. While talking about mobile optimization, a colleague mentioned that they had a strict draw call limit of 100 while working on their mobile VR game. That seems like a good limit for the vast majority of mobile games.
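The toy model below, sketched in Python rather than Unity C#, shows why per-tile property blocks scale so badly. It is a deliberate simplification and not Unity’s actual batching logic: it assumes consecutive renderers can share a draw call only when both their material and their property block match, and it ignores every other batching limit. `TileMaterial` and the helper names are hypothetical.

```python
from typing import List, Optional, Tuple

# A renderer's batch key: (material name, property block id or None for no block).
Renderer = Tuple[str, Optional[int]]

def count_draw_calls(renderers: List[Renderer]) -> int:
    """Count draw calls, starting a new batch whenever the batch key changes."""
    calls = 0
    previous = None
    for key in renderers:
        if key != previous:
            calls += 1
            previous = key
    return calls

def tile_grid(size: int, unique_blocks: bool) -> List[Renderer]:
    """One renderer per tile; optionally give each tile its own property block."""
    return [("TileMaterial", i if unique_blocks else None) for i in range(size * size)]

print(count_draw_calls(tile_grid(24, unique_blocks=True)))   # 576
print(count_draw_calls(tile_grid(36, unique_blocks=True)))   # 1296
print(count_draw_calls(tile_grid(36, unique_blocks=False)))  # 1
```

Under this model the draw call count grows with the square of the grid size as soon as every tile carries its own block, which matches the jump from 576 to 1296 described above.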


Tweening all those quads

The main cause of the game feeling sluggish was not a low framerate. There wasn’t a whole lot of movement on screen most of the time, so a low framerate was not terribly obvious. What was very obvious, unfortunately, was the delay between pressing a color and anything starting to change.

I’m going to gloss over my terrible flood fill implementation that took several milliseconds to execute. Mostly out of embarrassment, but also because I’d like to keep this post about things that (almost) anyone can learn from and use. Flood fills are not going to be relevant to the vast majority of readers. Seriously though, pick the right collection type…
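The post doesn’t show the flood fill, so the following is only a sketch of what picking the right collection type tends to look like for a grid: a FIFO queue (`collections.deque` here) for the frontier, since removing from the front of an array-backed list costs O(n) per dequeue, and the flat color array itself as the visited marker, so each tile is enqueued at most once. The flat row-major board layout and the function name are assumptions.

```python
from collections import deque

def flood_fill(colors, width, height, new_color, start=0):
    """Recolor the region connected to `start`; return the changed indices in BFS order.

    `colors` is a flat, row-major list (index = y * width + x), modified in place.
    """
    old_color = colors[start]
    if old_color == new_color:
        return []
    changed = []
    frontier = deque([start])   # FIFO queue: O(1) append and popleft
    colors[start] = new_color   # recoloring doubles as the "visited" mark
    while frontier:
        i = frontier.popleft()
        changed.append(i)
        x, y = i % width, i // width
        for nx, ny in ((x - 1, y), (x + 1, y), (x, y - 1), (x, y + 1)):
            if 0 <= nx < width and 0 <= ny < height:
                j = ny * width + nx
                if colors[j] == old_color:
                    colors[j] = new_color
                    frontier.append(j)
    return changed

board = [0, 0, 1,
         0, 1, 1,
         2, 2, 1]
print(flood_fill(board, 3, 3, new_color=1))  # [0, 1, 3]
print(board)                                 # [1, 1, 1, 1, 1, 1, 2, 2, 1]
```

Because the search is breadth-first, the returned order also grows outward from the start tile, which is one natural way to derive per-tile wave delays.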

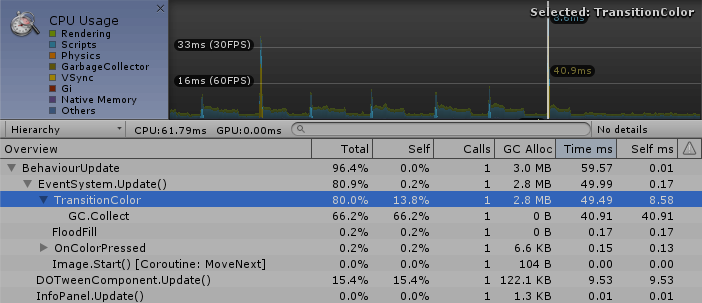

DOTween is great. It’s just not that great at pooling tweens that have to keep changing. My tweens were constantly offset by a few milliseconds to create the wave effect. The offsets combined with the changing target colors made it impossible to pool these tweens. At least, I never got it working properly. As a result, every flood fill generated a ton of new tween instances: 2-3 MB of garbage on every button press on a larger board. The garbage collector ends up having a field day, eating up 20-40ms or more of your frame time on desktop depending on grid size, and significantly more on mobile.

Animations run smoothly at the start of a game, as I only animate the tiles that are changing. Towards the end of a game most tiles are changing and performance becomes quite dreadful. The screenshot above is of a 36x36 grid with around 90% of the board being filled and animated.

On desktop, creating the tweens takes up to about 10ms, and running them afterwards can take even longer at times. Again, this is significantly worse on mobile, especially on lower-end devices.

Single mesh; the solution.

The perfect solution to the draw call problem would be to only draw one object total. Luckily, the things I do with the grid are very simple. Tiles are a solid color, with an icon blended over top. Animations are colors interpolating and simple scaling of the quad. I found a way to combine all of this into a vertex and fragment shader, rendered using a single static mesh.

The first time a grid size is selected in a session, the game creates a static mesh for it. This mesh is pooled, so it only has to be generated once. The mesh is just a grid of individual quads, with every quad having two UV channels. In one channel the quad covers the entire 0-1 UV range; in the other, all four vertices share a single point: the tile’s grid position divided by 64.
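A minimal sketch of that mesh layout, in Python with plain lists standing in for Unity’s mesh arrays. The function name, the one-unit quad size and the vertex order are assumptions; only the two UV channels follow the description above.

```python
def build_grid_mesh(size, max_size=64):
    """Build a size x size grid of quads with the two UV channels described above."""
    vertices, uv0, uv1, triangles = [], [], [], []
    corners = ((0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0))
    for y in range(size):
        for x in range(size):
            base = len(vertices)
            cell = (x / max_size, y / max_size)  # all four vertices share this point
            for cx, cy in corners:
                vertices.append((x + cx, y + cy, 0.0))  # one-unit quad per tile
                uv0.append((cx, cy))                     # full 0-1 range per quad
                uv1.append(cell)
            # Two triangles per quad, clockwise (Unity's front-face convention).
            triangles.extend((base, base + 2, base + 1, base, base + 3, base + 2))
    return vertices, uv0, uv1, triangles

vertices, uv0, uv1, triangles = build_grid_mesh(36)
print(len(vertices), len(triangles))  # 5184 7776
```

At the 64x64 maximum this is 16,384 vertices, comfortably below the 65,535-vertex limit of Unity’s default 16-bit mesh index format.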

64 is the maximum grid size. I chose this value because I did not want to limit the size of my tiles while writing the implementation. It wouldn’t be very hard to change later on, but I knew I would likely want grids bigger than 32x32, and that 64x64 would likely be too tiny to enjoy on phone screens. Power-of-two texture sizes tend to be more efficient to work with, so I just used 64x64 textures here.

The shader does everything it needs using two textures (and an icon spritesheet). These textures serve as a lookup table for all the required data. They are standard RGBA textures that use point sampling, since they are sampled using the grid position stored in one of the UV channels. Point sampling is essential here: with bilinear filtering, each lookup would blend in values from neighboring tiles and mess up everything.
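Why point sampling matters can be checked with a little texel arithmetic. The sketch below (hypothetical helper names) models a nearest-neighbour lookup and a bilinear lookup on a 64-texel-wide texture. Sampling at the tile’s grid position divided by 64, as the mesh does, lands exactly on the left edge of the tile’s texel: point sampling still returns that texel, while bilinear filtering mixes it 50/50 with its neighbour. Sampling at the texel centre, `(x + 0.5) / 64`, is a common safeguard against interpolation error nudging a lookup into the previous texel; the post itself uses `x / 64`.

```python
import math

def point_sample_index(u, size):
    """Texel index returned by a nearest-neighbour (point) lookup at coordinate u."""
    return min(math.floor(u * size), size - 1)

def bilinear_weights(u, size):
    """(left texel, right texel, weight of right) for a linear-filtered lookup.

    Texel centres sit at (i + 0.5) / size; edge clamping is ignored here.
    """
    x = u * size - 0.5
    left = math.floor(x)
    return left, left + 1, x - left

print(point_sample_index(5 / 64, 64))  # 5
print(bilinear_weights(5 / 64, 64))    # (4, 5, 0.5)
```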

The first texture contains information relevant to the vertex shader:

| Channel | Vertex data |
|---|---|
| Red | Tile scale bonus, with 0 being regular size and 1 being the maximum bonus size defined in the shader. |
| Green | Icon index. This simply acts as a UV offset for sampling the correct icon to blend on top. |
| Blue | Interpolation value, used to interpolate between the tile’s start and target values. |
| Alpha | Interpolation delay. This is used to delay the interpolation done on this tile. |

The second texture contains information relevant to the fragment shader:

| Channel | Fragment data |
|---|---|
| RGB | Tile color. Simply the solid color applied to the tile. |
| Alpha | Icon opacity. This let me interpolate the icon opacity on a per-tile basis. |
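As an illustration of that channel layout, here is a hypothetical Python sketch that writes one tile’s values into two flat, row-major RGBA buffers using index `y * 64 + x`, which matches the left-to-right, bottom-to-top order Unity’s `Texture2D.SetPixels` expects. The helper names are made up for the example. Values are kept as plain floats; an 8-bit-per-channel texture would clamp them to 0-1 and quantize them.

```python
GRID = 64   # data textures are 64x64, one texel per tile
ICONS = 8   # the icon sheet is 8x1

# Row-major RGBA buffers, one 4-tuple per texel (index = y * GRID + x).
vert_data = [(0.0, 0.0, 0.0, 0.0)] * (GRID * GRID)
frag_data = [(0.0, 0.0, 0.0, 0.0)] * (GRID * GRID)

def texel_index(x, y):
    return y * GRID + x

def write_tile(x, y, scale_bonus, icon, interp, delay, rgb, icon_opacity):
    """Store one tile's values using the channel layout from the tables."""
    i = texel_index(x, y)
    # R: scale bonus, G: icon index as a UV offset into the 8x1 sheet,
    # B: interpolation value, A: interpolation delay
    vert_data[i] = (scale_bonus, icon / ICONS, interp, delay)
    # RGB: tile color, A: icon opacity
    frag_data[i] = (rgb[0], rgb[1], rgb[2], icon_opacity)

write_tile(2, 1, scale_bonus=0.5, icon=3, interp=1.0, delay=0.25,
           rgb=(1.0, 0.0, 0.0), icon_opacity=0.8)
print(vert_data[texel_index(2, 1)])  # (0.5, 0.375, 1.0, 0.25)
```

Storing the icon as `index / 8` is what lets the vertex shader add the green channel directly to `uv0.x * .125`.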

It was very useful that the data I needed for each shader stage fit inside a single texture. This let me get away with a single texture lookup per stage. I will let the shader code explain how the data is used.

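```hlsl
// Declarations inferred from how the functions below use them.
// Sampling a texture in the vertex stage (tex2Dlod) requires #pragma target 3.0 or higher.
#include "UnityCG.cginc"

sampler2D _DataVert;  // per-tile vertex data (scale bonus, icon offset, ...)
sampler2D _DataFrag;  // per-tile fragment data (color, icon opacity)
sampler2D _IconSheet; // 8x1 icon spritesheet

struct appdata
{
    float4 vertex : POSITION;
    float2 uv0 : TEXCOORD0; // 0-1 across each quad
    float2 uv1 : TEXCOORD1; // tile grid position / 64, shared by all four vertices
};

struct v2f
{
    float4 vertex : SV_POSITION;
    float2 uv0 : TEXCOORD0;
    float2 uv1 : TEXCOORD1;
};

v2f vert (appdata v)
{
    v2f o;

    // Get the information from the data texture.
    // tex2Dlod takes an explicit mip level; the vertex stage has no derivatives for tex2D.
    float4 vertData = tex2Dlod(_DataVert, float4(v.uv1.xy, 0, 0));

    // Tile size bonus. This should probably be turned into a shader uniform.
    float size = vertData.r * .2f;

    // Use the 0-1 UV space in the uv0 channel to push the quad's vertices outwards,
    // displaced by the size value from above (and along -Z by the same amount).
    v.vertex = v.vertex + float4(((v.uv0 - float2(.5, .5)) * size).xy, -size, 0);
    o.vertex = UnityObjectToClipPos(v.vertex);

    // Shift the 0-1 range over to cover the correct tile icon.
    // The icon sheet is 8x1, so we just multiply the regular 0-1 UVs by .125 to fit properly.
    o.uv0 = float2((v.uv0.x * .125) + vertData.g, v.uv0.y);
    o.uv1 = v.uv1;

    return o;
}

fixed4 frag (v2f i) : SV_Target
{
    // Do our texture lookup once,
    // to figure out what color the tile should be, as well as the icon's opacity.
    float4 fragData = tex2D(_DataFrag, i.uv1);

    // Screen-blend the icon, scaled by its per-tile opacity, over the tile color.
    return 1 - (1 - fixed4(fragData.rgb, 1)) * (1 - (tex2D(_IconSheet, i.uv0) * fragData.a));
}
```

As you can see, the shaders don’t actually do any of the animation work. That is done on the CPU at the end of every frame.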
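The fragment shader’s return expression is a screen blend, `1 - (1 - tile) * (1 - icon * opacity)` per channel. The small Python model below (hypothetical function name) makes its behaviour easy to check: a zero icon value or zero opacity leaves the tile color untouched, and a white icon at full opacity drives the pixel to white, so the icon can only ever lighten the tile.

```python
def screen_blend(tile_rgb, icon_rgb, icon_opacity):
    """Per-channel 1 - (1 - tile) * (1 - icon * opacity), mirroring the fragment shader."""
    return tuple(1 - (1 - t) * (1 - i * icon_opacity)
                 for t, i in zip(tile_rgb, icon_rgb))

tile = (0.2, 0.4, 0.6)
print(screen_blend(tile, (0.0, 0.0, 0.0), 1.0))  # approximately the tile color
print(screen_blend(tile, (1.0, 1.0, 1.0), 1.0))  # (1.0, 1.0, 1.0)
print(screen_blend(tile, (1.0, 1.0, 1.0), 0.5))  # approximately (0.6, 0.7, 0.8)
```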

I keep three copies of the texture data around on the CPU side: one for the final data that is committed to the GPU every frame, one for the interpolation start values and one for the interpolation target values.

This allowed me to iterate over flat arrays, something that’s super quick to do.

The code simply decrements the interpolation delay and value appropriately using Time.deltaTime, and then interpolates between the start and target values from their respective arrays based on the interpolation value.
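A minimal sketch of that update loop, in Python with one scalar per tile (the real data has several channels) and hypothetical names. Following the description above, the delay and the interpolation value both count down; `value` is taken to go from 1 at the start to 0 at the target, which is an assumption about its direction. The same lists are updated in place every frame, so no new arrays are created.

```python
def update_tiles(delay, value, start, target, out, dt, duration):
    """Advance every tile's transition by dt seconds, mutating the lists in place.

    delay[i]: seconds left before tile i starts moving (the wave offset)
    value[i]: remaining interpolation, 1.0 = at start, 0.0 = at target
    """
    for i in range(len(value)):
        if delay[i] > 0.0:
            delay[i] = max(0.0, delay[i] - dt)  # still waiting for its turn in the wave
            continue
        if value[i] > 0.0:
            value[i] = max(0.0, value[i] - dt / duration)
        t = 1.0 - value[i]                      # 0 at start, 1 at target
        out[i] = start[i] + (target[i] - start[i]) * t

delay = [0.0, 0.5]
value = [1.0, 1.0]
start = [0.0, 0.0]
target = [10.0, 10.0]
out = [0.0, 0.0]
update_tiles(delay, value, start, target, out, dt=0.25, duration=1.0)
print(out)  # [2.5, 0.0]
```

After each call, `out` would be the data copied into the vertex and fragment data textures.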

I iterate over the entire array, and after these values are all updated the final data is applied to the vertex and fragment data textures.

These data textures and the backing data arrays are completely static: they never get discarded, resized, or recreated after the game starts. Furthermore, the iteration code is essentially allocation-free. The result is pretty much zero allocations every frame, which, as you saw earlier, means no massive garbage collections that take forever.

Now, the current implementation still has a couple of things that I would like to change.

- Only iterate over the area used by the currently selected grid size. This should be an easy change to the update loop; it’s just been fast enough that I haven’t felt the need yet.

- I don’t use the blue and alpha channels in the vertex shader, so I could cut down memory usage a bit by switching to two-channel textures for the vertex data.

- Pull the constants defined in my shader code into uniforms. This would let me change them without diving into the shader code again. It wouldn’t really change anything; I just don’t like keeping magic numbers around.

- Use smaller vertex data types to cut down memory usage some more. Again, it wouldn’t really change all that much; it’s just cleaner to use the right types for this. It’s not like I need 64 bits of memory in my `float2` UV channels to define a 0 and a 1 for one of the UV channels.
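For the first item, assuming a smaller board simply occupies the first `grid_size` rows and columns of the 64x64 arrays (the post doesn’t say where it sits), the update loop only needs to visit the indices below. A 36x36 board then touches 1,296 of the 4,096 entries, under a third. The names are hypothetical.

```python
GRID = 64  # the data textures and CPU arrays are always 64x64

def active_indices(grid_size):
    """Yield flat row-major indices for the first grid_size columns of the first grid_size rows."""
    for y in range(grid_size):
        row = y * GRID
        for x in range(grid_size):
            yield row + x

print(sum(1 for _ in active_indices(36)))  # 1296
```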

Don’t stop there!

Mobile devices are almost always capped at 60fps, even if you tell Unity (or whatever engine you use) not to use vsync. Instead of continuously rendering new frames, your phone simply waits until it’s time to get back to work. During this time it uses significantly less power than while it’s actually working on a new frame.

If your game runs at 60fps, you are still not done. There is a big difference between your game being able to run at 70 versus 1000fps. Both will only display 60fps, but one spends the vast majority of each frame working, while the other barely works at all. Consider the impact your game has on your users’ battery levels when deciding whether you’re done optimizing!
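The difference can be put in numbers with a simple model: if the game could render at N fps uncapped, each frame takes 1/N seconds of work, and at a 60fps cap that work fills 60/N of every frame. Treating busy time as a rough proxy for power draw is a simplification, and the function name is hypothetical.

```python
def busy_fraction(uncapped_fps, display_fps=60.0):
    """Fraction of each displayed frame spent working, given the uncapped frame rate."""
    frame_cost = 1.0 / uncapped_fps    # seconds of work per frame
    frame_budget = 1.0 / display_fps   # seconds available per displayed frame
    return min(1.0, frame_cost / frame_budget)

for fps in (70, 1000):
    print(f"{fps} fps uncapped -> busy {busy_fraction(fps):.0%} of each 60 fps frame")
```

For the two cases above, that is roughly 86% versus 6% of each frame spent working.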